QATC Survey Results

This article details the results of the most recent QATC quarterly survey on critical quality assurance and training topics. About 70 contact center professionals representing a wide variety of operations provided insight regarding the quality assurance tools and processes in their centers.

Participant Profile

The largest group of participants comes from call center operations with between 101 and 200 agents. However, the balance is widely dispersed across all ranges, so the respondents represent a broad spectrum of call center sizes. Participants from financial, healthcare, utility, insurance, and “other” industries have the largest representation, but there are respondents from a wide variety of industries.

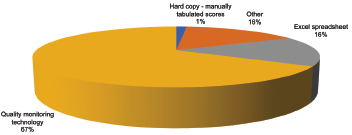

Technology or Format Used for QA

Respondents were asked what technology or format is used for their quality monitoring evaluations. Two-thirds indicated that they use a quality monitoring technology. Sixteen percent each chose Excel spreadsheets or some other technology/format. Only 1% indicated the use of hard copy and manually tabulated scores. Tracking and managing the evaluations is a time-consuming task, but it is critical that it be as accurate and timely as possible. While there appears to be significant use of commercial technologies, many centers still use other methods.

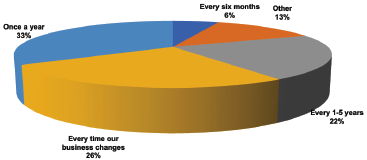

Frequency of Form Updates

Respondents were asked how often quality monitoring forms are updated. One-third of the respondents indicated it is done once per year, while 26% indicated it is done whenever the business changes. Twenty-two percent indicated the form is updated at intervals of between 1 and 5 years. It is important to the credibility and usefulness of the process that the form capture the most important elements of the contacts. The need for updates is driven in part by the dynamic nature of the types of contacts and the process changes the organization experiences.

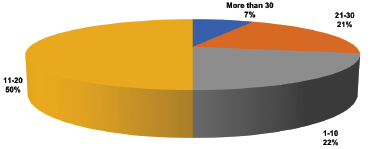

Number of Questions/Statements on the Forms

Respondents were asked how many questions and/or statements are on the quality evaluation form. Half of the respondents indicated that they have between 10 and 20 items on their forms. Only 7% indicated they have more than 30 items, and the remaining 43% are evenly split between fewer than 10 items and 21 to 30 items. Balancing a focus on the most important qualities of the contact against capturing enough detail to guide coaching is challenging. Too few items make it difficult to identify the root cause of problems and may provide little coachable content, but too many can waste the analyst's time and dilute the importance of the critical items.

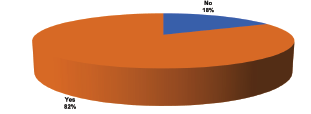

Weighting of Items

Survey participants were asked if their questions and/or statements are weighted. Over 80% indicated that they do weight some items to give more value to the most important items. However, 18% do not provide weighting, suggesting that all the items evaluated are of equal importance to the organization.

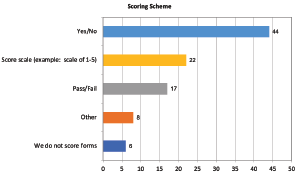
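To make the effect of weighting concrete, here is a minimal sketch of one way a weighted form score could be calculated. The function name, the item layout, and the weights are illustrative assumptions, not taken from any respondent's form or from a particular quality monitoring product.

```python
def weighted_score(items):
    """Return a 0-100 score for one evaluation.

    items: list of (points_earned, points_possible, weight) tuples.
    The tuple layout and the weights are hypothetical.
    """
    total_weight = sum(weight for _, _, weight in items)
    if total_weight == 0:
        raise ValueError("at least one item must have a nonzero weight")
    # Each item contributes its fraction earned, scaled by its weight.
    earned = sum(weight * got / possible for got, possible, weight in items)
    return 100.0 * earned / total_weight


# Hypothetical three-item form: one critical item and two minor ones.
evaluation = [
    (1, 1, 3),  # critical item, passed, weight 3
    (0, 1, 1),  # minor item, missed, weight 1
    (1, 1, 1),  # minor item, passed, weight 1
]

# The same answers with every weight set to 1 (an unweighted form).
unweighted = [(got, possible, 1) for got, possible, _ in evaluation]

print(weighted_score(evaluation))
print(round(weighted_score(unweighted), 1))
```

In this example the agent missed only a minor item, so the weighted form scores the contact higher than an unweighted form would. Had the critical item been missed instead, the weighted score would drop well below the unweighted one.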

Scoring Scheme

Respondents were asked what scoring scheme they utilize on their forms. Multiple choices were accepted. The most frequently chosen were the binary choices of Yes/No or Pass/Fail. Approximately one-third of the respondents indicated they use a scale such as 1 to 5. Only 6 do not score their forms.

There are interesting arguments in the industry regarding scoring. Some say that ratings such as excellent, meets requirements, and needs improvement are helpful, especially for new hires. Scale scores are the most challenging to calibrate, and the larger the range of the scale, the harder calibration becomes. However, some items (such as soft skills) defy a simple binary choice and may be achieved to one degree or another. Using more than one scoring option for the items on a single form may provide more useful information than having only one option. Not scoring can take the focus off goal numbers and put the emphasis on the text provided to support coaching efforts. It is important to keep in mind the purpose of the QA evaluation and make sure the data provided and the processes support that purpose.

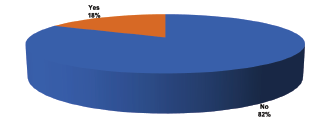
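For forms that mix scoring options, one possible approach is to convert every answer to a common 0-to-1 range before combining them. The sketch below assumes a form with Yes/No items and 1-to-5 scale items and gives each item equal weight; the names and the sample answers are hypothetical and are not drawn from the survey responses.

```python
def normalize(kind, value, low=1, high=5):
    """Convert one answer to a value between 0.0 and 1.0.

    kind is "binary" (True/False for Yes/No or Pass/Fail) or
    "scale" (an integer from low to high, 1 to 5 by default).
    """
    if kind == "binary":
        return 1.0 if value else 0.0
    if kind == "scale":
        if not low <= value <= high:
            raise ValueError(f"scale answer {value} is outside {low}-{high}")
        return (value - low) / (high - low)
    raise ValueError(f"unknown scoring scheme: {kind}")


def form_score(answers):
    """Average the normalized answers and express the result out of 100."""
    if not answers:
        raise ValueError("the form has no scored answers")
    normalized = [normalize(kind, value) for kind, value in answers]
    return 100.0 * sum(normalized) / len(normalized)


# Hypothetical evaluation mixing binary and scale items.
answers = [
    ("binary", True),   # e.g. verified the caller
    ("scale", 3),       # e.g. empathy, rated 3 of 5
    ("binary", False),  # e.g. offered further assistance
    ("scale", 5),       # e.g. clarity, rated 5 of 5
]

print(form_score(answers))
```

Note that with this mapping a mid-scale rating of 3 counts as half credit, which is itself a design choice a QA team would want to agree on during calibration.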

Bonus Points for Excellence

The respondents were asked if they award bonus points for exceptional performance. Only 18% indicated that they do have bonus points. While there may not be a defined bonus system within the QA evaluation process, it is important to identify and reward excellence. Rewarded behavior is repeated behavior.

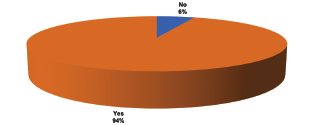

Comment Section

Respondents were asked if there is an area on the form for the evaluator to write comments and/or suggestions. Only 6% indicated they do not have a place for this on the forms. A comment area is especially important when binary choices are used, as yes/no or pass/fail may not tell the whole story. These text items are also very useful to the coach who will discuss the evaluation with the agent.

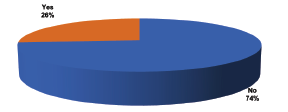

After-Call Work Evaluation

Respondents were asked if they include evaluation of the after-call work (ACW) on their quality monitoring form. Only about one-quarter (26%) indicated that they do evaluate the ACW. There is a wealth of opportunity for process improvement in the ACW, as it is often where the promises to the caller are kept and the work is done as agreed. If the data entry is inaccurate or the form isn’t sent, for example, the caller will probably need to call back, which not only leads to customer dissatisfaction but also impacts the workload and efficiency of the center. Not including ACW in the QA evaluation may be a function of a technical limitation, but where it is possible, it can be a great source of process improvement ideas as well as agent coaching opportunities.

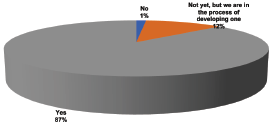

Quality Definitions Document

Respondents were asked if they have a quality definitions document that describes and gives examples of each question on the QA form. Eighty-seven percent indicated that they do have this important document. Twelve percent indicated that they are in the process of developing one, and 1% indicated they do not have this type of document. Clear definitions of what is expected to achieve success on each item on the QA form are critical to the calibration process. The document is also a great aid in coaching, where examples can be provided to agents to help them understand how to improve their performance.

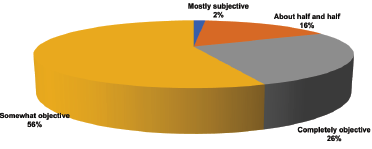

Objectivity of the Form

Respondents were asked to give their opinion regarding the objectivity of their QA form (behavior based). The responses are quite dispersed. Approximately one-quarter (26%) believe their form is objective, while over half (56%) think it is somewhat objective. Another 16% believe it is about half and half, but only 2% think their form is totally subjective. This is another opportunity for the QA team to review their forms and questions. It is very difficult to calibrate and even harder to explain the scores to agents if the question can have a score that varies depending on who did the evaluation.

When asked for free-form text comments about the objectivity of their form/process, the most frequent responses include:

- Soft skills can be hard to measure. Some of our team puts too much emphasis on dead air.

- We reviewed behaviors and ensured these were based on quantifiable, measurable components we could all agree on.

- Our form also includes sections for Auto Fail (critical infractions) and Auto Zero (catastrophic infractions).

- Our team reviews our Criteria Guide every quarter to ensure it is in alignment with our current processes.

- We have implemented an AI evaluation process which has required all questions be objective for the computer to be able to score them.

- Our form is split 60/40. 60% is objective based on policies and procedures. 40% is based on the experience provided which aligns with the mission and values of our organization.

Summary

This survey provides some insight into Quality Assurance evaluation forms and their use. For many, the technologies and processes are mature, while others are just getting started. Scoring processes vary, and achieving a completely objective set of evaluation items is elusive. Quality monitoring and effective feedback are critical to agent development and achieving customer satisfaction. Looking for ways to improve processes throughout the organization can be even more important than focusing only on the contact center activities. As we know, the contact center will interact with more customers in a day than the other departments might in a year. Mining the information from the interactions for product development, marketing, and process improvements throughout the company can raise the value of the contact center to the entire organization.

We hope you will complete the next survey, which will be available online soon.