The Essential Guide to Auto-QA

By Jamie Scott, CEO and Co-Founder, EvaluAgent

We wrote The Essential Guide to Auto-QA because we’ve seen too many organizations fail to realize the benefits that this game-changing technology can deliver.

While speech and text analytics tools are powerful, they don’t produce the desired results and conversational insight without effort. That effort must come from the people who understand the business best, rather than the analytics vendor or a third-party consultant.

Read on to discover:

- The best use cases for Automated Quality Assurance

- What can be automated (and what can’t)

- The best ways to deploy the technology in your business

The Link Between Conversation Analytics & Auto-QA

Do I need another shiny toy?

Conversation Analytics is far from new – in fact, our founders at EvaluAgent remember its earliest applications in QA more than 15 years ago – but recently, analytics has made a bold resurgence. However, that resurgence has come with problems. Conversation Analytics (a catch-all term for the technology) is now celebrated as a fix-all remedy for contact center performance: a replacement for your insights or coaching teams and a way to automatically performance-manage your valuable contact center agents.

In reality, neither is true. In this guide, we focus on the potential benefits, challenges, and opportunities of using Conversation Analytics to automate your existing quality assurance processes.

So, how can Auto-QA transform your contact center?

AUGMENT

Quality & Compliance

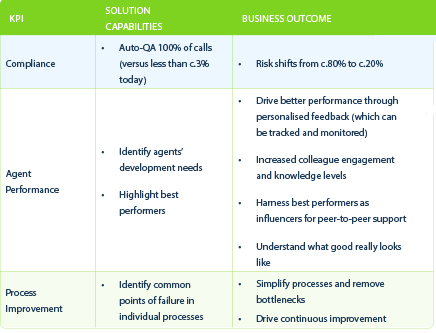

Here’s how Auto-QA can be harnessed to drive better outcomes across three critical KPIs: compliance, agent performance, and process improvement.

Augment or Automate

So should I sack my QA team now?

We’ve now reached the biggest misconception: that an Auto-QA platform can somehow replace a contact center’s highly skilled Quality Assurance team. This is absolutely untrue!

A business investing in an Auto-QA platform is no threat to a highly skilled Quality Assurance team. In fact, it’s the exact opposite. By making correct use of the platform’s benefits, QA teams can hugely increase their efficiency and effectiveness. It’s all about finding the right balance…

Choosing the Best of Both Worlds

Yes, the benefits of Auto-QA are vast, but the human touch of your QA team is still hugely important. The best solution is to support the QA team with targeted analytics. This will help reduce the heavy lifting and allow them to focus their efforts on extremes that would benefit from a deep dive.

With more time, watch how your QA and Performance Improvement efforts become more efficient, effective, and employee-first. It’s the best of both worlds.
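One way to picture "targeted analytics" is as a filter that surfaces only the highest- and lowest-scoring conversations for a manual deep dive. The following is a minimal Python sketch under stated assumptions, not a description of how any particular platform works: the `ScoredCall` type, the 0-100 `auto_qa_score` field, and the `pick_extremes` helper are all hypothetical names introduced for illustration.

```python
from typing import List, NamedTuple


class ScoredCall(NamedTuple):
    call_id: str
    auto_qa_score: float  # assumed 0-100 automated score (hypothetical field)


def pick_extremes(calls: List[ScoredCall], n: int = 2) -> List[ScoredCall]:
    """Return the n lowest- and n highest-scoring calls for manual review."""
    if n <= 0:
        return []
    ranked = sorted(calls, key=lambda c: c.auto_qa_score)
    if len(ranked) <= 2 * n:
        # Too few calls to split into two groups: review them all.
        return ranked
    return ranked[:n] + ranked[-n:]


# Illustrative data only; these scores are made up for the example.
calls = [
    ScoredCall("c1", 92.0),
    ScoredCall("c2", 41.0),
    ScoredCall("c3", 77.0),
    ScoredCall("c4", 18.0),
    ScoredCall("c5", 85.0),
    ScoredCall("c6", 63.0),
]
review_queue = [c.call_id for c in pick_extremes(calls, n=1)]
```

Here the QA team would be pointed at the single weakest and single strongest call, leaving the middle of the distribution to automated scoring.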

Getting the Balance Right

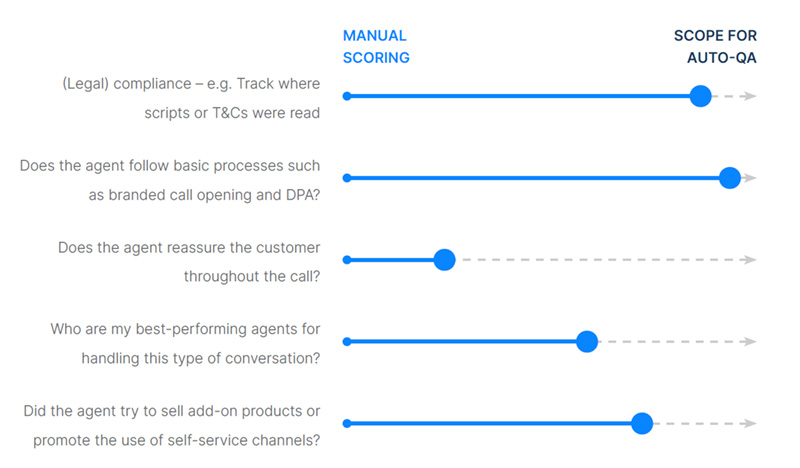

So how do we do that? The table below demonstrates how to build your own roadmap for transforming your QA process, moving from fully manual operations to a manual/automated hybrid that makes the best use of both, without trying to tackle areas that will be hard to deliver.

Deployment Planning

So now what?

Your success with any Auto-QA platform will depend on how effectively you embed the technology into your existing business processes. Many organizations have attempted to make the leap, only for the technology to become a shiny piece of software left on the virtual shelf to decay.

The way to avoid this is through planning – planning how to operationalize the technology today and planning for how it may look in the future. Understanding which of the three available operating models will work best for you is a crucial success factor in this respect.

The Three Operating Models

QA CENTRIC

A centralized approach to automated score analysis that is focused on business process, product and training improvements.

What does this look like?

- The QA team uses Auto-QA results and topics “behind the scenes.”

- The results are not directly shared with team leaders or agents for individual calls.

- The Quality Framework that the agents are evaluated against may change very little.

- Actions are focused on areas where team performance can be improved.

When would you adopt this?

- You’re new to Analytics and need time to develop the Auto-QA scorecard to the required level of accuracy before moving on to model 2 or 3 (this applies to most organizations).

- You haven’t had a Quality Framework in place before and need to learn what it should include.

- Your Quality Framework is heavily focused on soft skills assessment rather than compliance and doesn’t lend itself to the introduction of hard measures.

TEAM LEADER CENTRIC

This model exposes individual QA results to support team leaders in personalized agent quality performance evaluation.

What does this look like?

- Auto-QA results are produced for each call, alongside the manual QA scorecard.

- The manual QA scorecard is adapted to remove those elements now part of the Auto-QA.

- Agents and team leaders review the Auto-QA results as part of their standard quality review meetings with full visibility of the Auto-QA results.

- There is limited exposure of Auto-QA results across the operation. Agents get feedback on their own performance.

- Agent remuneration and reward may include some elements that are driven by Auto-QA.

When would you adopt this?

- You have confidence in your Analytics query and topic accuracy, so meetings focus on actions to improve rather than on discussing failings in the data.

- You have sufficient, well-defined elements within your Quality Framework that make it suitable for a distinct Auto-QA scorecard element.

- Your team leaders have a number of coaching and development options available to them that can assist agents to address improvement actions.

- You are building an effective reporting model, even if Auto-QA is not yet fully embedded.

AGENT CENTRIC

This is a more advanced model where Auto-QA is fully embedded in the performance model and at least some of the incentives and drivers outlined below are deployed.

What does this look like?

- Agents are fully exposed through their own dashboard for Auto-QA.

- Other teams’ or team members’ data are shown in an aggregated, anonymized format.

- Relative performance in each area of Auto-QA is shown along with short-, medium-, and longer-term trends, e.g., “You’re in the top quartile for Empathy this month.”

- Targets for Auto-QA elements can be set.

- Competitive inter- or cross-team targets can be set, typically for additional bonuses or prizes rather than basic remuneration.

- Agent remuneration and reward will include a number of elements that are driven by Auto-QA.
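A message such as "You're in the top quartile for Empathy this month" can be derived from peer scores without showing the agent anyone else's individual result. The sketch below is one simple way to do that in Python; the `quartile` and `dashboard_message` functions, the agent names, and the scores are all hypothetical, and the tie-handling rule (counting only strictly higher scores) is an assumption rather than anything the guide specifies.

```python
from typing import Dict


def quartile(agent: str, scores: Dict[str, float]) -> int:
    """Return 1 (top quartile) to 4 (bottom quartile) for one agent."""
    own = scores[agent]
    # Count peers who scored strictly higher than this agent.
    higher = sum(1 for s in scores.values() if s > own)
    # Integer arithmetic avoids floating-point edge cases at boundaries.
    return (higher * 4) // len(scores) + 1


def dashboard_message(agent: str, element: str, scores: Dict[str, float]) -> str:
    """Build the agent-facing message; peer scores are never exposed."""
    labels = {1: "top", 2: "second", 3: "third", 4: "bottom"}
    rank = labels[quartile(agent, scores)]
    return f"You're in the {rank} quartile for {element} this month."


# Illustrative monthly Empathy scores; values are made up for the example.
empathy = {
    "ana": 88, "ben": 72, "cai": 95, "dee": 60,
    "eli": 81, "fay": 77, "gus": 69, "hal": 90,
}
message = dashboard_message("cai", "Empathy", empathy)
```

Only the requesting agent's own position is returned, which keeps other team members' data aggregated and anonymized from that agent's point of view.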

When would you adopt this?

- Your Analytics query and topic accuracy rates are high across all the elements you have embedded in the Auto-QA process.

- Your team leaders have a robust Learning Framework available to them that provides a tailored coaching and development programme for each agent to systematically drive long-term performance goals.

- You have a QA and Analytics team that is well established with strong processes for managing and handling operational feedback.

- You have an effective framework for reporting which meets the needs of stakeholders across the business, and Analytics is embedded rather than a separate entity.
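The "When would you adopt this?" criteria for the three models can be read as a rough readiness checklist. The Python sketch below encodes that reading; it is an illustration only. The `Readiness` fields and the `suggest_model` function are hypothetical names, and treating each checklist as all-or-nothing and cumulative (agent centric requires team leader centric readiness first) is a simplifying assumption, not a rule stated in this guide.

```python
from dataclasses import dataclass


@dataclass
class Readiness:
    # Team leader centric criteria (paraphrased from the guide)
    confident_in_accuracy: bool
    well_defined_framework_elements: bool
    coaching_options_for_team_leaders: bool
    # Agent centric criteria (paraphrased from the guide)
    accuracy_high_across_all_elements: bool
    robust_learning_framework: bool
    established_qa_and_analytics_team: bool
    reporting_meets_stakeholder_needs: bool


def suggest_model(r: Readiness) -> str:
    """Suggest a starting operating model; defaults to QA CENTRIC."""
    team_leader_ready = (
        r.confident_in_accuracy
        and r.well_defined_framework_elements
        and r.coaching_options_for_team_leaders
    )
    # Assumption: agent centric builds on team leader centric readiness.
    agent_ready = (
        team_leader_ready
        and r.accuracy_high_across_all_elements
        and r.robust_learning_framework
        and r.established_qa_and_analytics_team
        and r.reporting_meets_stakeholder_needs
    )
    if agent_ready:
        return "AGENT CENTRIC"
    if team_leader_ready:
        return "TEAM LEADER CENTRIC"
    return "QA CENTRIC"


# Example: accuracy is trusted and coaching exists, but the Learning
# Framework and reporting are not yet mature.
current_state = Readiness(True, True, True, False, False, True, False)
suggestion = suggest_model(current_state)
```

In practice the decision involves judgment that a checklist cannot capture, so a result like this is best treated as a conversation starter for planning rather than a verdict.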

By creating our three operating models, we’ve formed a three-step conversation roadmap for any contact center. Though the model you start with depends on your analytics maturity, the end goal is always the same: an effective model that combines human expertise and AI to maximize efficiency.

Benjamin Franklin said:

“By failing to prepare, you are preparing to fail.”

So, what can you do to ensure your project is successful?

Here are our three main pointers:

- Focus on your use case, not the technology. Start with Auto-QA to prove the technology’s capabilities and then look to expand into different areas.

- Don’t try to automate everything – little wins can generate big results.

- Decide on your initial approach prior to buying the technology; it will make everything easier.

About EvaluAgent:

At EvaluAgent, we believe that Every Conversation Counts. For over 10 years, EvaluAgent has been helping contact centers across the globe to dramatically improve their Quality Assurance capabilities through our award-winning AI-powered Quality Assurance and Performance Improvement platform. For more information, go to www.evaluagent.com.

If you want to learn more about using Auto-QA to reveal unseen insights and automate compliance checks, visit https://www.evaluagent.com/platform/auto-qa/ to speak to an expert today.